Experienced – Passionate – Dedicated – Professional

Our Software Testing Techniques

Discover how Eonix Technologies guarantees excellence with advanced software quality assurance techniques. Our commitment to precision and reliability ensures top-tier performance in every solution we deliver.

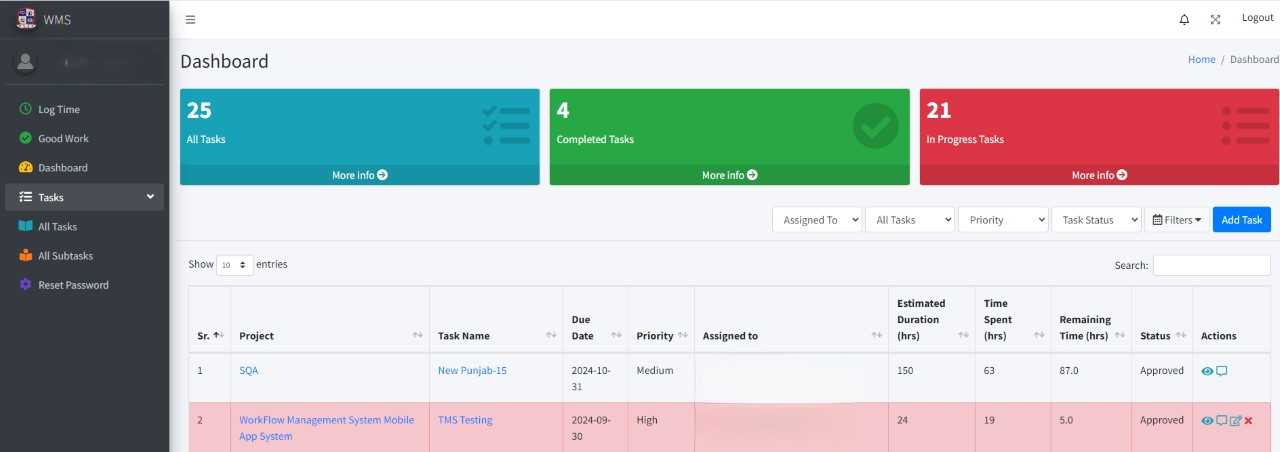

Workflow Management System

Testing and documentation for the Workflow Management System covered the following:

- Manual Testing: Conducted extensive manual testing to identify functional issues, user interface inconsistencies, and workflow anomalies. Ensured that all user interactions met the system requirements and performed as expected across different scenarios.

- Automated Testing: Implemented automated test scripts to validate the workflow processes and keep regression testing efficient. Automated tests covered critical functionality, including task creation, notifications, and approval workflows.

- Bug Tracking Sheets: Maintained detailed bug tracking sheets to document all identified issues, including their severity, steps to reproduce, and resolution status. Regularly updated the sheets to reflect the progress and status of bug fixes.

- Test Cases Documentation: Created comprehensive test cases to cover all aspects of the system, including functional testing, integration testing, and user acceptance testing (UAT). Documented expected outcomes and compared them with actual results during test execution.

- Performance Testing: Assessed the system’s performance under various load conditions to identify potential bottlenecks. Conducted stress testing to ensure the system could handle high traffic without significant degradation.

- User Feedback Incorporation: Collected feedback from end-users during UAT to identify usability issues and incorporated necessary adjustments based on their input.

- Reporting and Analysis: Generated test reports summarizing the testing results, bug trends, and system stability over time. These reports were used to inform development teams and stakeholders about the system’s readiness for deployment.
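The bug tracking sheets described above record each issue's severity, steps to reproduce, and resolution status. A minimal Python sketch of such a record, together with the kind of severity summary that can feed a test report, might look like the following. The class, field names, and status values are illustrative assumptions, not the actual sheet layout.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class BugRecord:
    """One row of a hypothetical bug tracking sheet."""
    bug_id: str
    severity: str                  # assumed values: "low", "medium", "high"
    steps_to_reproduce: List[str]
    status: str                    # assumed values: "open", "in_progress", "resolved"


def open_bugs_by_severity(bugs: Iterable[BugRecord]) -> Counter:
    """Count unresolved bugs per severity level."""
    return Counter(bug.severity for bug in bugs if bug.status != "resolved")


# Sample data, invented for illustration only.
sample_bugs = [
    BugRecord("WF-1", "high", ["Create task", "Submit for approval"], "open"),
    BugRecord("WF-2", "low", ["Open notification panel"], "resolved"),
    BugRecord("WF-3", "low", ["Edit task title"], "in_progress"),
]
summary = open_bugs_by_severity(sample_bugs)
```

Tracking the same summary across test cycles gives a simple view of bug trends over time.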

Facial Recognition System

Testing and documentation for the Facial Recognition System covered the following:

- Manual Testing: Conducted manual testing to evaluate the accuracy and reliability of the facial recognition algorithm under various lighting conditions, angles, and facial expressions. Verified user identity matching and measured false acceptance and false rejection rates.

- Automated Testing: Employed automated scripts to simulate different facial recognition scenarios, ensuring the system’s consistent performance. Automated tests covered edge cases such as partial obstructions, low-resolution images, and multiple faces in a single frame.

- Bug Tracking Sheets: Maintained detailed bug tracking sheets to log issues related to image processing, algorithm accuracy, and integration with other modules. Recorded the severity of each bug, steps to reproduce, and status updates throughout the testing lifecycle.

- Test Cases Documentation: Developed a set of test cases focusing on different use cases, including user enrollment, face matching, liveness detection, and system alerts. Documented the expected versus actual results to measure system performance.

- Performance Testing: Conducted load testing to measure the system’s response time and recognition speed under high volumes of image data. Assessed the impact of multiple concurrent users on the system’s performance.

- Data Privacy and Security Assessment: Evaluated the system’s data handling processes to ensure compliance with privacy regulations. Conducted tests to confirm the secure storage and transmission of facial recognition data.

- User Feedback Incorporation: Collected feedback from real users during pilot testing phases, focusing on user experience and system accuracy. Made iterative improvements to the algorithm based on this feedback.

- Reporting and Analysis: Produced detailed test reports to summarize findings, including recognition accuracy metrics, bug statistics, and system performance trends. These reports helped guide further development and optimization efforts.
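The false acceptance and false rejection rates mentioned above are standard biometric metrics: the share of impostor attempts that were wrongly accepted, and the share of genuine attempts that were wrongly rejected. The Python sketch below shows one common way to compute them from labeled match attempts. The function name, input layout, and sample values are hypothetical and do not come from the project's test suite.

```python
from typing import Iterable, Tuple


def far_frr(attempts: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Compute (FAR, FRR) from (is_genuine, was_accepted) pairs.

    FAR = accepted impostor attempts / all impostor attempts
    FRR = rejected genuine attempts / all genuine attempts
    A rate is reported as 0.0 when its denominator is zero.
    """
    genuine_total = genuine_rejected = 0
    impostor_total = impostor_accepted = 0
    for is_genuine, was_accepted in attempts:
        if is_genuine:
            genuine_total += 1
            if not was_accepted:
                genuine_rejected += 1
        else:
            impostor_total += 1
            if was_accepted:
                impostor_accepted += 1
    far = impostor_accepted / impostor_total if impostor_total else 0.0
    frr = genuine_rejected / genuine_total if genuine_total else 0.0
    return far, frr


# Invented sample: 2 genuine attempts (1 rejected), 4 impostor attempts (1 accepted).
sample_attempts = [
    (True, True), (True, False),
    (False, False), (False, True), (False, False), (False, False),
]
far, frr = far_frr(sample_attempts)
```

In practice both rates depend on the match threshold, so they are usually reported per threshold setting and per test condition, such as lighting or camera angle.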

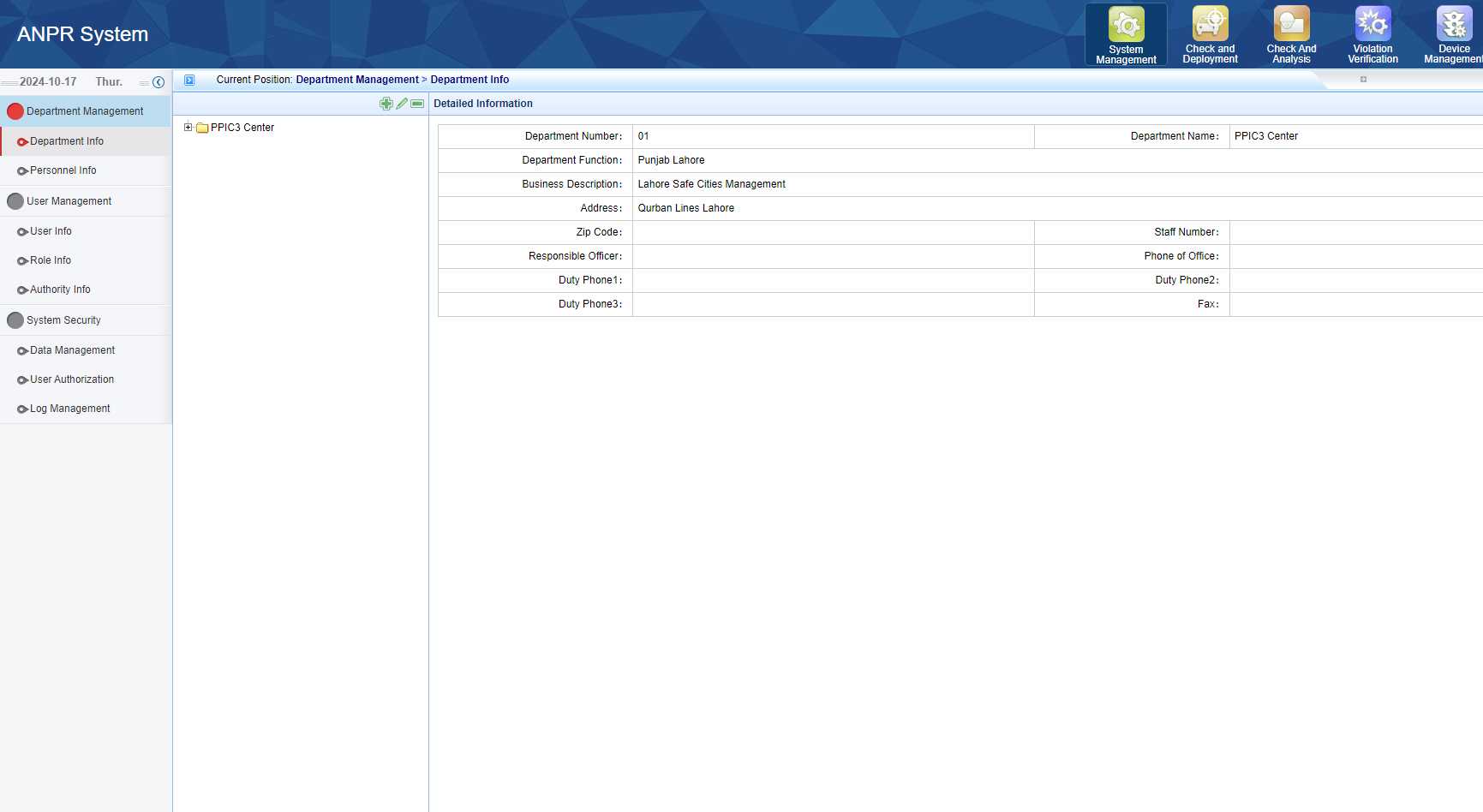

Automatic Number Plate Recognition System

Testing and documentation for the Automatic Number Plate Recognition (ANPR) System covered the following:

- Manual Testing: Performed manual testing to evaluate the system’s ability to accurately detect and recognize vehicle number plates in various conditions, such as different lighting, weather, and motion. Verified the system’s recognition accuracy for plates with different fonts, sizes, and layouts.

- Automated Testing: Utilized automated testing scripts to simulate a range of scenarios, including high-speed vehicle movement, occluded plates, and different plate angles. Automated tests helped ensure the system’s consistency and robustness in real-world conditions.

- Bug Tracking Sheets: Created detailed bug tracking sheets to document any issues found during testing, such as misrecognition, false positives/negatives, and integration problems. Tracked the severity, reproduction steps, and resolution status for each bug.

- Test Cases Documentation: Developed comprehensive test cases to cover different aspects of the system, including image capture, plate detection, optical character recognition (OCR), and database matching. Compared the expected and actual results for each test case to validate the system’s accuracy.

- Performance Testing: Conducted load testing to evaluate the system’s performance under various traffic densities and vehicle speeds. Assessed the impact of processing multiple images simultaneously on system response times and accuracy.

- Integration Testing: Tested the integration of the ANPR system with other systems, such as databases, traffic management, and law enforcement tools. Ensured seamless data exchange and accurate alerts for detected violations.

- Environmental Condition Testing: Evaluated the system’s performance in different environmental conditions, such as low light, rain, fog, and glare. Analyzed the effects of these factors on plate recognition accuracy and made necessary adjustments.

- User Feedback Incorporation: Collected user feedback during field testing to identify practical issues in real-world use. Improved the system based on suggestions regarding user interface, alert accuracy, and operational efficiency.

- Reporting and Analysis: Generated test reports summarizing the accuracy rates, system performance metrics, bug trends, and overall stability. These reports provided insights for further system enhancements and fine-tuning.
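Comparing expected and actual results for plate recognition, as described above, can be reduced to a simple accuracy rate. The Python sketch below counts a plate as correct only when every character matches after spacing, punctuation, and letter case are normalized. The function names, the normalization rule, and the sample plates are assumptions made for illustration, not the project's actual scoring method.

```python
import re
from typing import Iterable, Tuple


def normalize_plate(text: str) -> str:
    """Uppercase the text and drop everything except ASCII letters and digits."""
    return re.sub(r"[^A-Z0-9]", "", text.upper())


def plate_accuracy(results: Iterable[Tuple[str, str]]) -> float:
    """Fraction of (expected, recognized) pairs that match exactly after normalization."""
    pairs = list(results)
    if not pairs:
        return 0.0
    correct = sum(
        1 for expected, recognized in pairs
        if normalize_plate(expected) == normalize_plate(recognized)
    )
    return correct / len(pairs)


# Invented sample: the second plate has one misread character (Z read as 2).
sample_results = [
    ("AB12 CDE", "ab12cde"),
    ("XY34 ZZZ", "XY34 2ZZ"),
]
accuracy = plate_accuracy(sample_results)
```

Reporting this rate separately for each condition, such as low light, rain, or high vehicle speed, makes it easier to see where recognition degrades.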

Traffic Management System

Testing and documentation for the Traffic Management System covered the following:

- Manual Testing: Conducted manual testing to validate the system’s functionality, including traffic signal control, vehicle counting, and congestion detection. Verified that all features met operational requirements and performed correctly under different traffic conditions.

- Automated Testing: Used automated testing scripts to simulate various traffic scenarios, such as peak-hour congestion, accidents, and road closures. Automated tests helped ensure that the system could dynamically adjust traffic signals and reroute vehicles as needed.

- Bug Tracking Sheets: Maintained bug tracking sheets to log issues related to traffic signal timings, system integration with cameras and sensors, and data accuracy. Documented each bug’s severity, reproduction steps, and current resolution status.

- Test Cases Documentation: Created a comprehensive set of test cases to cover all aspects of the system, including traffic signal logic, vehicle detection, route optimization, and incident management. Compared expected outcomes with actual results to validate functionality.

- Performance Testing: Conducted stress and load testing to evaluate the system’s performance during high traffic volumes and adverse conditions. Measured response times for real-time data processing and adjustments to traffic controls.

- Integration Testing: Tested the integration of the Traffic Management System with external data sources such as road sensors, surveillance cameras, and traffic databases. Ensured seamless communication and accurate data synchronization across different modules.

- Environmental Condition Testing: Assessed system performance under different environmental conditions, including night-time operation, rain, fog, and heavy winds. Made necessary calibrations to maintain optimal traffic control in all weather scenarios.

- User Feedback Incorporation: Collected feedback from traffic operators and other stakeholders during pilot testing phases. Addressed suggestions regarding user interface improvements, system alerts, and dashboard usability.

- Reporting and Analysis: Produced detailed reports summarizing system performance metrics, bug trends, and effectiveness of traffic management strategies. Analyzed data to identify areas for further enhancement and to optimize traffic flow.

What People Are Saying

Explore testimonials from our valued clients. See how our commitment to excellence has positively impacted their experiences.

Eonix Technologies delivered an exceptional web application that exceeded our expectations. Their team showcased technical expertise and a keen understanding of our needs, producing a visually stunning and user-friendly product. We highly recommend Eonix Technologies for their commitment to quality and timely delivery.

Sarah Thompson, CTO of Greenfield Solutions

Eonix Technologies transformed our vision into a seamless web application. Their professionalism, attention to detail, and technical proficiency were impressive. The end product was intuitive, efficient, and exceeded our expectations. We highly recommend Eonix Technologies for top-notch web development services.

David Martinez, CEO of InnovateTech